This article explains hyperparameter tuning of the KNN algorithm using two of the most common methods: the error rate graph and GridSearchCV.

The k-nearest neighbours algorithm, also known as KNN, is a supervised machine learning algorithm that is commonly used for classification problems. It works best on small datasets, because predictions become slow on large ones: classifying each new point requires computing its distance to every stored training point. Sometimes the trained model does not give accurate results, and we want to apply hyperparameter tuning to find the optimum values of KNN's parameters. In this article, we will use an error rate graph (often described as the elbow method) to find the optimum value of K, and then we will use GridSearchCV.

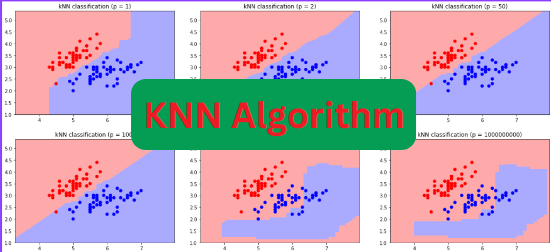


What is KNN in Python

KNN is known as a lazy algorithm in machine learning because it does not build a model during training: it simply stores the training data and does all the work at prediction time. To classify an input, KNN computes the distance between the input and every point in the historical dataset and then assigns the input to one of the classes. This algorithm can be used for both binary and multi-class classification.

Let us assume that we have the following dataset and a test point, and we want to classify the test point into one of the classes using the KNN algorithm.

First, KNN computes the distance between the input and each point in the dataset using the Euclidean distance formula. Then, based on the K value (3 in this case), it classifies the input into one of the given classes.
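For reference, the Euclidean distance between an input point x and a stored point p, each with n features, is:

$$
d(\mathbf{x}, \mathbf{p}) = \sqrt{\sum_{j=1}^{n} \left(x_j - p_j\right)^2}
$$

As a quick worked example using the first two rows of the dataset shown later in this article, (Age, Salary) = (19, 19000) and (35, 20000): d = √(16² + 1000²) = √1000256 ≈ 1000.13. The Salary difference accounts for almost all of this distance, which is one reason KNN is sensitive to the scale of its features.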

As you can see, because K is three, the algorithm compares the input with its three nearest data points and classifies it by majority vote, that is, it picks the class that appears most often among those neighbours. One of the biggest challenges when implementing KNN is finding the optimum value of K, which is difficult to guess. Here we will use hyperparameter tuning methods to find it.
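To make the two steps concrete (distance computation, then majority vote), here is a minimal from-scratch sketch in pure Python. It is illustrative only and is not how scikit-learn implements KNeighborsClassifier; the class name SimpleKNN and the toy points are hypothetical. Note that fit() only stores the data, which is what makes KNN a lazy learner.

```python
import math
from collections import Counter


class SimpleKNN:
    """Minimal KNN classifier for illustration (hypothetical, not sklearn)."""

    def __init__(self, k=3):
        self.k = k

    def fit(self, X, y):
        # Lazy learning: "training" just stores the data, nothing is computed.
        self.X = [tuple(row) for row in X]
        self.y = list(y)
        return self

    def predict_one(self, x):
        # Euclidean distance from x to every stored training point.
        pairs = [(math.dist(x, xi), yi) for xi, yi in zip(self.X, self.y)]
        # Keep the labels of the k closest points.
        pairs.sort(key=lambda pair: pair[0])
        nearest_labels = [label for _, label in pairs[: self.k]]
        # Majority vote: the most common label among the k neighbours wins.
        return Counter(nearest_labels).most_common(1)[0][0]

    def predict(self, X):
        return [self.predict_one(x) for x in X]


# Toy 2-D data: class "A" near (1, 1), class "B" near (5, 5).
X_toy = [(1, 1), (1, 2), (2, 1), (5, 5), (6, 5), (5, 6)]
y_toy = ["A", "A", "A", "B", "B", "B"]

model = SimpleKNN(k=3).fit(X_toy, y_toy)
print(model.predict([(2, 2), (5, 4)]))  # ['A', 'B']
```

All the cost sits in predict_one(), which scans every stored point; this is why prediction slows down as the dataset grows.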

First, we will just implement the KNN algorithm on a dataset and then we will try to find the optimum values for the parameters using hyperparameter tuning methods of KNN.

Importing the dataset

The most important thing in any machine learning model training is to get access to the dataset. Without data, we cannot train our model. Here we will use a preprocessed dataset and implement the KNN algorithm on it.

Let us import the dataset using the Pandas module. You can use your own dataset.

# import pandas module

import pandas as pd

# importing the dataset

data = pd.read_csv('dataset.csv')

# heading of the dataset

data.head()

Output:

Age Salary Purchased

0 19 19000 0

1 35 20000 0

2 26 43000 0

3 27 57000 0

4 19 76000 0

This is a binary classification problem: we have to predict whether a person will purchase the product based on their age and salary.

Once we have imported the dataset, the next step is to separate the inputs (X) from the output (y) and then split them into training and testing sets.

# dividing the dataset

X = data.drop('Purchased', axis=1)

y = data['Purchased']

# importing the train_test_split method from sklearn

from sklearn.model_selection import train_test_split

# splitting the data

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.30, random_state=0)

Here we have split the dataset so that 70% of the rows are used for training and the remaining 30% for testing. Fixing random_state=0 makes the split reproducible.
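The following pure-Python sketch shows what a shuffled 70/30 split with a fixed seed does. The function simple_train_test_split is a hypothetical helper, not scikit-learn's implementation, so it will not select the same rows as train_test_split(..., random_state=0). Like scikit-learn, it rounds a fractional test size up to a whole number of rows.

```python
import math
import random


def simple_train_test_split(X, y, test_size=0.30, seed=0):
    """Hypothetical helper: shuffle row indices with a fixed seed, then hold out
    a test_size fraction. Not sklearn's implementation, so the chosen rows differ."""
    n = len(X)
    n_test = math.ceil(test_size * n)  # round the test set size up
    indices = list(range(n))
    random.Random(seed).shuffle(indices)  # same seed -> same shuffle every run
    test_idx, train_idx = indices[:n_test], indices[n_test:]
    X_train = [X[i] for i in train_idx]
    X_test = [X[i] for i in test_idx]
    y_train = [y[i] for i in train_idx]
    y_test = [y[i] for i in test_idx]
    return X_train, X_test, y_train, y_test


# Ten hypothetical (Age, Salary) rows with 0/1 labels.
X_demo = [[20 + i, 20000 + 5000 * i] for i in range(10)]
y_demo = [i % 2 for i in range(10)]

X_tr, X_te, y_tr, y_te = simple_train_test_split(X_demo, y_demo)
print(len(X_tr), len(X_te))  # 7 3
```

Calling the helper again with the same seed returns exactly the same split, which is the role random_state plays in the article's code.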

Training and Evaluating the KNN algorithm

Once the data is split, the next step is to import the KNN model and train it on the training data. We will use scikit-learn's KNeighborsClassifier class, with K set to 3 for now.

# importing KNN algorithm

from sklearn.neighbors import KNeighborsClassifier

# K value set to be 3

classifer = KNeighborsClassifier(n_neighbors=3)

# model training

classifer.fit(X_train, y_train)

Once training is complete, we can predict the classes of the test data points. We will also plot a confusion matrix to compare the actual and predicted values.

# making predictions

y_pred = classifer.predict(X_test)

# importing seaborn

import seaborn as sns

# Making the Confusion Matrix

from sklearn.metrics import confusion_matrix

# providing actual and predicted values

cm = confusion_matrix(y_test, y_pred)

# annot=True writes the count in each cell

sns.heatmap(cm, annot=True)

Output:

As you can see, some test points were misclassified. Let us print the accuracy to see how often the model predicts the correct class.

# importing accuracy score

from sklearn.metrics import accuracy_score

# printing the accuracy score

accuracy_score(y_test, y_pred)

Output:

0.757575

With the default parameter values (except K, which we set to 3), the model is about 75.8% accurate. Next, we will see how different methods can improve this.
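The accuracy score and the confusion matrix are closely related: correct predictions lie on the matrix's diagonal. The sketch below builds a 2×2 confusion matrix by hand, with rows for actual classes and columns for predicted classes (the same layout scikit-learn's confusion_matrix uses), then computes the accuracy and its complement, the error rate. The labels are hypothetical, not the article's test set.

```python
def confusion_matrix_2x2(y_true, y_pred):
    """Rows = actual class (0, 1), columns = predicted class (0, 1)."""
    cm = [[0, 0], [0, 0]]
    for actual, predicted in zip(y_true, y_pred):
        cm[actual][predicted] += 1
    return cm


def accuracy_from_cm(cm):
    # Correct predictions are on the diagonal; everything else is an error.
    correct = cm[0][0] + cm[1][1]
    total = sum(sum(row) for row in cm)
    return correct / total


# Hypothetical labels, not the article's test set.
actual = [0, 0, 0, 1, 1, 0, 1, 0]
predicted = [0, 1, 0, 1, 0, 0, 1, 0]

cm_demo = confusion_matrix_2x2(actual, predicted)
acc = accuracy_from_cm(cm_demo)
print(cm_demo)  # [[4, 1], [1, 2]]
print(acc)      # 0.75
print(1 - acc)  # 0.25 (the error rate)
```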

Hyperparameter Tuning of the KNN Algorithm

So far, we have run KNN with the default parameter values (apart from K), but the defaults do not necessarily give the most accurate results. To find better parameter values, we will use hyperparameter tuning. Here we will mainly discuss two methods: the error rate graph and GridSearchCV.

Error Rate graph – Hyperparameter Tuning of KNN

A common question is how to find the optimum value of K in KNN. One simple answer is the error rate graph: train the model for a range of K values, compute the error rate (the fraction of misclassified test points) for each one, and plot the error rate against K. The K value at the lowest point of the graph is taken as the optimum.
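Before plotting, it may help to see the computation on its own. This pure-Python sketch, with hypothetical randomly generated data and helper names, computes the error rate for each K and picks the K with the lowest error. When several K values tie, min() keeps the first one, that is, the smallest K.

```python
import math
import random
from collections import Counter


def knn_predict(X_train, y_train, x, k):
    # Sort training points by Euclidean distance to x, then vote among the k nearest.
    nearest = sorted(zip(X_train, y_train), key=lambda p: math.dist(p[0], x))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def error_rate_by_k(X_train, y_train, X_test, y_test, k_values):
    rates = {}
    for k in k_values:
        preds = [knn_predict(X_train, y_train, x, k) for x in X_test]
        # Error rate = fraction of test points that were misclassified.
        wrong = sum(p != t for p, t in zip(preds, y_test))
        rates[k] = wrong / len(y_test)
    return rates


def make_toy_clusters(n, seed):
    # Hypothetical data: two overlapping Gaussian clusters with labels 0 and 1.
    rng = random.Random(seed)
    X, y = [], []
    for _ in range(n):
        label = rng.randint(0, 1)
        centre = 0.0 if label == 0 else 1.5
        X.append((rng.gauss(centre, 1.0), rng.gauss(centre, 1.0)))
        y.append(label)
    return X, y


X_train_demo, y_train_demo = make_toy_clusters(60, seed=1)
X_test_demo, y_test_demo = make_toy_clusters(30, seed=2)

k_values = range(1, 16)
rates = error_rate_by_k(X_train_demo, y_train_demo, X_test_demo, y_test_demo, k_values)
best_k = min(rates, key=rates.get)  # first (smallest) K with the lowest error
print(best_k, rates[best_k])
```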

Let us first visualize the error rate graph for KNN using Python.

# import numpy

import numpy as np

import matplotlib.pyplot as plt

error_rate = []

# try every K value from 1 to 39

for i in range(1, 40):

    # train a KNN model with K = i

    knn = KNeighborsClassifier(n_neighbors=i)

    knn.fit(X_train, y_train)

    # predict the test set and record the fraction of misclassified points

    pred_i = knn.predict(X_test)

    error_rate.append(np.mean(pred_i != y_test))

# configure and plot the error rate for each K value

plt.figure(figsize=(10, 4))

plt.plot(range(1, 40), error_rate, color='blue', linestyle='dashed', marker='o', markerfacecolor='green', markersize=10)

# label the plot

plt.title('Error Rate vs. K-Values')

plt.xlabel('K-Values')

plt.ylabel('Error Rate')

plt.show()

Output:

In the figure, the graph reaches its lowest point at K = 6, so we will take 6 as the optimum value of K.

We will now train the model again with K = 6 to see whether the accuracy improves.

# importing KNN algorithm

from sklearn.neighbors import KNeighborsClassifier

# K value set to 6

classifer = KNeighborsClassifier(n_neighbors=6)

# model training

classifer.fit(X_train, y_train)

Now, let us make predictions and visualize them in a confusion matrix to see how well the model performs.

# making predictions

y_pred = classifer.predict(X_test)

# importing seaborn

import seaborn as sns

# Making the Confusion Matrix

from sklearn.metrics import confusion_matrix

# providing actual and predicted values

cm = confusion_matrix(y_test, y_pred)

# annot=True writes the count in each cell

sns.heatmap(cm, annot=True)

Output:

This time the predictions look better than before. Let us also compute the accuracy to confirm it.

# importing accuracy score

from sklearn.metrics import accuracy_score

# printing the accuracy score

accuracy_score(y_test, y_pred)

Output:

0.787878

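As you can see, choosing K with the error rate graph improved the accuracy from about 75.8% to about 78.8%. Keep in mind that K was chosen using the test-set error, so this test accuracy is somewhat optimistic; GridSearchCV avoids this by scoring candidates with cross-validation on the training data.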

GridSearchCV – Hyperparameter Tuning of KNN

GridSearchCV is a popular hyperparameter tuning method in machine learning, and the way it works is simple. We define candidate values for different parameters of the model; GridSearchCV then trains and scores the model with cross-validation for every combination of those values and reports the combination with the best score.
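To see how much work the search does, the sketch below enumerates the same grid that the following code passes to GridSearchCV, using itertools.product. Each combination is one candidate, and with cv=3 every candidate is trained and scored three times.

```python
from itertools import product

# The same grid as in the GridSearchCV code that follows.
grid_params = {
    "n_neighbors": [5, 7, 9, 11, 13, 15],
    "weights": ["uniform", "distance"],
    "metric": ["minkowski", "euclidean", "manhattan"],
}

# One candidate per combination of values (a Cartesian product).
names = list(grid_params)
candidates = [dict(zip(names, values)) for values in product(*grid_params.values())]

cv = 3
print(len(candidates))       # 36 candidates (6 * 2 * 3)
print(len(candidates) * cv)  # 108 model fits with 3-fold cross-validation
print(candidates[0])         # {'n_neighbors': 5, 'weights': 'uniform', 'metric': 'minkowski'}
```

With verbose=1, GridSearchCV prints a similar line with the number of folds, candidates, and total fits when the search starts. It may enumerate the candidates in a different order, but the count is the same, and it grows multiplicatively with every value added to the grid.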

Let us implement the GridSearchCV for the hyperparameter tuning of KNN to get optimum parameter values.

# importing the gridsearchcv for hyperparameter tuning of KNN

from sklearn.model_selection import GridSearchCV

grid_params = { 'n_neighbors' : [5,7,9,11,13,15],

'weights' : ['uniform','distance'],

'metric' : ['minkowski','euclidean','manhattan']}

# initializing the hyperparameter tuning of KNN

gs = GridSearchCV(KNeighborsClassifier(), grid_params, verbose = 1, cv=3, n_jobs = -1)

# fit the model on our train set

g_res = gs.fit(X_train, y_train)

# get the hyperparameters with the best score

g_res.best_params_

Output:

{'metric': 'minkowski', 'n_neighbors': 11, 'weights': 'uniform'}

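GridSearchCV found the best combination among the specified values: K = 11, uniform weights, and the Minkowski metric (with its default power parameter p=2, Minkowski is the same as the Euclidean distance). You can now use these values to train the model again and evaluate it on the test data. Because GridSearchCV refits the best combination on the whole training set by default (refit=True), you can also call g_res.predict(X_test) directly or use the refitted model stored in g_res.best_estimator_.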
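The grid also tuned weights and metric. With weights='uniform' each of the K neighbours gets one vote, while with weights='distance' scikit-learn weights each neighbour's vote by the inverse of its distance. The metric controls how distance is measured: 'manhattan' sums absolute differences instead of squared ones. This pure-Python sketch (hypothetical helper names and toy points) shows cases where each choice changes the prediction.

```python
import math
from collections import defaultdict


def euclidean(a, b):
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


def manhattan(a, b):
    return sum(abs(ai - bi) for ai, bi in zip(a, b))


def knn_vote(X_train, y_train, x, k, weights="uniform", metric=euclidean):
    """Hypothetical helper. Assumes no training point coincides with x (distance > 0)."""
    neighbours = sorted(
        ((metric(xi, x), yi) for xi, yi in zip(X_train, y_train)),
        key=lambda pair: pair[0],
    )[:k]
    scores = defaultdict(float)
    for dist, label in neighbours:
        if weights == "uniform":
            scores[label] += 1.0         # every neighbour gets one vote
        else:  # "distance"
            scores[label] += 1.0 / dist  # closer neighbours get larger votes
    return max(scores, key=scores.get)


# One close "A" neighbour versus two farther "B" neighbours (K = 3).
X1, y1 = [(1, 0), (3, 0), (4, 0)], ["A", "B", "B"]
print(knn_vote(X1, y1, (0, 0), k=3, weights="uniform"))   # B (2 votes to 1)
print(knn_vote(X1, y1, (0, 0), k=3, weights="distance"))  # A (1/1 > 1/3 + 1/4)

# The nearest neighbour depends on the metric (K = 1).
X2, y2 = [(2, 2), (0, 3)], ["A", "B"]
print(knn_vote(X2, y2, (0, 0), k=1, metric=euclidean))  # A (2.83 < 3)
print(knn_vote(X2, y2, (0, 0), k=1, metric=manhattan))  # B (3 < 4)
```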

We can also check how well the best combination performed during the search. The best_score_ attribute holds the mean cross-validated accuracy of the best parameter combination, measured on the validation folds of the training data.

# find the best score

g_res.best_score_

Output:

0.80086

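The best combination reached a mean cross-validated accuracy of about 80.1%. Because this score is measured on the training data, it is not directly comparable with the 78.8% test accuracy from the error rate graph section; to compare like with like, evaluate the refitted model on X_test. In a similar way, you can use these methods to find optimum parameter values for KNN and improve the accuracy of the model.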
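best_score_ is the mean of the per-fold validation scores of the winning candidate. The sketch below reproduces that idea from scratch for a single parameter, K: it splits the data into 3 folds, trains on two folds, scores on the third, averages the three accuracies, and keeps the K with the highest mean. It is a simplification under stated assumptions: it uses plain contiguous folds, whereas GridSearchCV with an integer cv on a classifier uses stratified folds, and the data and helper names are hypothetical.

```python
import math
import random
from collections import Counter


def knn_predict(X_train, y_train, x, k):
    # Vote among the k training points nearest to x (Euclidean distance).
    nearest = sorted(zip(X_train, y_train), key=lambda p: math.dist(p[0], x))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def fold_indices(n, n_folds):
    # Contiguous folds; the first n % n_folds folds get one extra row.
    sizes = [n // n_folds + (1 if i < n % n_folds else 0) for i in range(n_folds)]
    folds, start = [], 0
    for size in sizes:
        folds.append(list(range(start, start + size)))
        start += size
    return folds


def cv_accuracy(X, y, k, n_folds=3):
    fold_scores = []
    for val_idx in fold_indices(len(X), n_folds):
        val = set(val_idx)
        X_tr = [X[i] for i in range(len(X)) if i not in val]
        y_tr = [y[i] for i in range(len(X)) if i not in val]
        correct = sum(knn_predict(X_tr, y_tr, X[i], k) == y[i] for i in val_idx)
        fold_scores.append(correct / len(val_idx))
    # The reported score is the mean of the per-fold accuracies.
    return sum(fold_scores) / n_folds


def grid_search_k(X, y, k_values, n_folds=3):
    results = {k: cv_accuracy(X, y, k, n_folds) for k in k_values}
    best_k = max(results, key=results.get)  # first K with the highest mean score
    return best_k, results[best_k], results


# Hypothetical data: two overlapping clusters, labels drawn at random.
rng = random.Random(0)
X_demo, y_demo = [], []
for _ in range(45):
    label = rng.randint(0, 1)
    centre = 0.0 if label == 0 else 1.5
    X_demo.append((rng.gauss(centre, 1.0), rng.gauss(centre, 1.0)))
    y_demo.append(label)

best_k, best_score, all_scores = grid_search_k(X_demo, y_demo, [5, 7, 9, 11, 13, 15])
print(best_k, round(best_score, 3))
```

Because every score comes from folds of the training data, the test set stays untouched until the final evaluation.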

Summary

Hyperparameter tuning of KNN means using methods such as the error rate graph and GridSearchCV to find the parameter values that give the most accurate results. In this short article, we implemented the KNN algorithm on the given dataset with default parameter values (and K = 3). Then we used an error rate graph to find the optimum value of K, which increased our accuracy slightly. Finally, we used GridSearchCV to search over several parameters at once and find their optimum values.

If you have any questions or suggestions, please let us know through comments.